The EU AI Act in practical terms

What it means for engineering managers and tech leads

The EU AI Act (AIA) is about engineering practices, not law

The EU AI Act (AIA) imposes obligations on every company that offers (or uses) AI-based products and services in the EU market, whether or not the company is located in the EU. It may seem that compliance is merely a legal “checkbox” - call the lawyers, get the paperwork done, that’s it. In reality, complying with the AIA means changing your company’s engineering practices.

Practical implications for engineering teams

In this article, I will briefly outline the implications of the EU AI Act in practical terms. My background is in software engineering, both as an IC and as a leader, and I am passionate about making technology useful. So I am always looking for the practical side of things and for ways to get stuff done efficiently. This article is written in that spirit.

The AIA is a 150-page document, so this one article can be no more than a starting point. I will write more about this. Please comment if you find this useful, and tell me which aspects you would like me to focus on next.

Making AI systems safe to use

The AIA's key aim is to ensure that AI systems are safe to use. It prohibits some potentially harmful systems outright (e.g. real-time biometric identification in public spaces). For everything else, it takes a risk-based approach and establishes a set of practices required to make AI systems safe.

What it means in practical terms

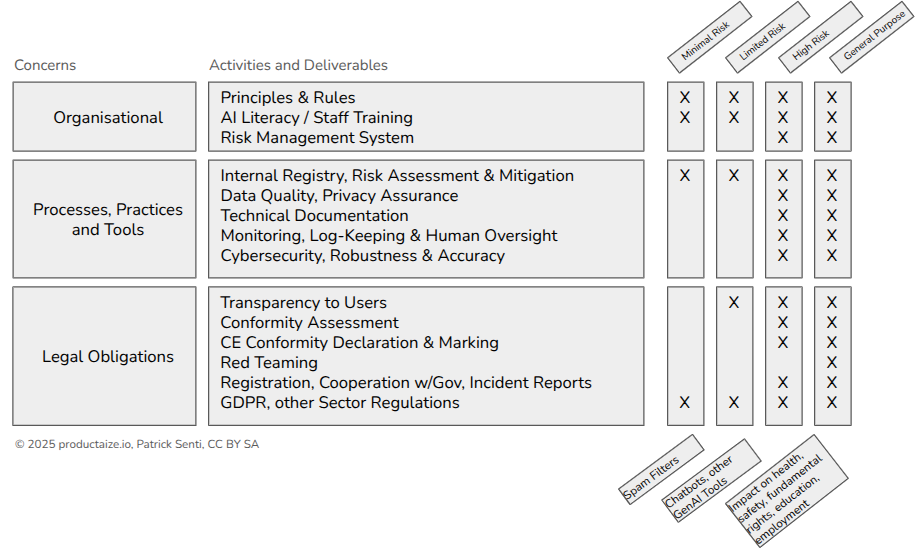

Besides ensuring that your AI system is not prohibited, the AIA requires a set of practices that depends on the system’s risk level. An AI system's risk is considered to be either minimal (e.g. spam filters), limited (e.g. chatbots) or high (e.g. AI used in education, employment, life insurance and credit scoring). General Purpose AI (e.g. ChatGPT) is not a risk level but a specific type of AI; it generally requires the same set of practices as high-risk systems.
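To make the risk levels concrete, here is a minimal sketch of a first-pass triage helper. It only encodes the examples named above; the names `RiskLevel`, `EXAMPLE_RISK_LEVELS` and `triage` are hypothetical, and a real classification needs legal review of the actual use case.

```python
from enum import Enum
from typing import Optional


class RiskLevel(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


# Illustrative lookup built only from the examples in this article.
# It is not a complete or authoritative mapping.
EXAMPLE_RISK_LEVELS = {
    "spam filter": RiskLevel.MINIMAL,
    "chatbot": RiskLevel.LIMITED,
    "education": RiskLevel.HIGH,
    "employment": RiskLevel.HIGH,
    "life insurance": RiskLevel.HIGH,
    "credit scoring": RiskLevel.HIGH,
}


def triage(use_case: str) -> Optional[RiskLevel]:
    """Return the example risk level, or None if the use case is unknown.

    None means "needs manual review", not "no risk".
    """
    return EXAMPLE_RISK_LEVELS.get(use_case.strip().lower())
```

Returning `None` for anything not in the table is deliberate: an unknown use case should be escalated to a person rather than silently treated as minimal risk.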

Most of the following requirements apply to high-risk systems only. Those marked by an asterisk (*) apply to all AI systems. See the graphic for a visual breakdown.

Organisational

required at the organisational level

Principles & Rules* - rules for using AI systems within the company, including scope and limitations

AI Literacy & Training* - who needs which qualifications (users, developers, operators, management and oversight)

Risk Management System - company-wide processes to be followed to assess and mitigate risk

Processes, Practices & Tools

required at the system level

Registry, Risk Assessment & Mitigation* - every AI system in use is known and assessed for its risk level, and its risks are mitigated

Data Governance, Privacy Assurance - data is used lawfully, its quality and lineage are managed, and decisions are documented

Technical Documentation - comprehensive documentation of use cases, methods, testing, dependencies and limitations

Monitoring, Log-Keeping & Human oversight - system use is continuously logged and analysed for issues, and human operators can stop the system easily

Accuracy, Robustness, Cybersecurity* - testing to establish safe operation
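Several of the system-level items above translate directly into code. The following is a minimal sketch, not a compliant implementation: it combines a registry entry, log-keeping and a human stop switch. All names (`AISystemRecord`, `OverseenSystem`, `SystemStopped`, the `cv-screening` example and its stand-in `predict` function) are hypothetical.

```python
import logging
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


@dataclass
class AISystemRecord:
    """One registry entry: purpose, risk level and mitigations of a system."""
    name: str
    purpose: str
    risk_level: str  # "minimal", "limited" or "high"
    mitigations: List[str] = field(default_factory=list)


class SystemStopped(RuntimeError):
    """Raised when the system is called after a human operator stopped it."""


class OverseenSystem:
    """Wraps a prediction function with an audit trail and a stop switch."""

    def __init__(self, record: AISystemRecord, predict: Callable[[Any], Any]) -> None:
        self.record = record
        self._predict = predict
        self._stopped = False
        self._logger = logging.getLogger(f"ai.{record.name}")
        self.audit_trail: List[Dict[str, str]] = []

    def stop(self, operator: str, reason: str) -> None:
        """Human oversight: one call halts all further predictions."""
        self._stopped = True
        self._record_event("stopped", operator=operator, reason=reason)

    def __call__(self, payload: Any) -> Any:
        if self._stopped:
            raise SystemStopped(f"{self.record.name} was stopped by an operator")
        result = self._predict(payload)
        # Assumption: logging repr() of inputs is acceptable here. Real systems
        # may need to log references instead of raw data for privacy reasons.
        self._record_event("prediction", input=repr(payload), output=repr(result))
        return result

    def _record_event(self, event: str, **details: str) -> None:
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "system": self.record.name,
            "event": event,
            **details,
        }
        self.audit_trail.append(entry)
        self._logger.info("%s", entry)


# The registry itself can start as a simple name -> record mapping.
registry: Dict[str, AISystemRecord] = {}
record = AISystemRecord(
    name="cv-screening",
    purpose="rank job applications",
    risk_level="high",  # employment is one of the high-risk examples in the text
    mitigations=["human review of every rejection"],
)
registry[record.name] = record
# The lambda is a stand-in for a real model call.
screening = OverseenSystem(record, predict=lambda cv_text: len(cv_text) > 20)
```

Calling `screening.stop(operator="alice", reason="drift detected")` makes every later call raise `SystemStopped`, and the stop itself lands in the audit trail. The design choice is to put oversight in a wrapper, so the same logging and stop behaviour applies to any model behind it.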

Legal Obligations

required at the system level

Transparency to users* - make users aware of the use of AI

Conformity assessment - document that the system conforms to legal requirements

CE Conformity declaration - user-visible declaration of the conformity

Red Teaming - test the robustness of the system to avoid misuse

Registration, Incident reporting - register with regulating body, timely reporting of issues

GDPR, sector regulations* - adhere to all applicable legal requirements
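The lists above can be turned into a simple gap analysis per system. This is a minimal sketch under two assumptions: requirements marked with an asterisk apply to every risk level, and everything else applies to high-risk systems only. The names `REQUIREMENTS`, `applicable` and `open_items` are hypothetical.

```python
from typing import Dict, List, Set

# Requirement name -> True if the article marks it with an asterisk,
# i.e. it applies to all AI systems and not only to high-risk ones.
REQUIREMENTS: Dict[str, bool] = {
    "Principles & Rules": True,
    "AI Literacy & Training": True,
    "Risk Management System": False,
    "Registry, Risk Assessment & Mitigation": True,
    "Data Governance, Privacy Assurance": False,
    "Technical Documentation": False,
    "Monitoring, Log-Keeping & Human oversight": False,
    "Accuracy, Robustness, Cybersecurity": True,
    "Transparency to users": True,
    "Conformity assessment": False,
    "CE Conformity declaration": False,
    "Red Teaming": False,
    "Registration, Incident reporting": False,
    "GDPR, sector regulations": True,
}

VALID_RISK_LEVELS = {"minimal", "limited", "high"}


def applicable(risk_level: str) -> List[str]:
    """List the requirements that apply to a system of the given risk level."""
    if risk_level not in VALID_RISK_LEVELS:
        raise ValueError(f"unknown risk level: {risk_level!r}")
    if risk_level == "high":
        return list(REQUIREMENTS)
    return [name for name, for_all in REQUIREMENTS.items() if for_all]


def open_items(risk_level: str, done: Set[str]) -> List[str]:
    """Return applicable requirements that are not yet marked as done."""
    return [name for name in applicable(risk_level) if name not in done]
```

For example, `open_items("limited", {"Transparency to users"})` returns the remaining asterisk-marked items. A General Purpose AI system could be passed in as `"high"`, following the statement above that it generally requires the same practices as high-risk systems.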

Disclaimer: The information provided in this article is for general informational purposes only and should not be considered as professional or legal advice.